I’ve been playing a lot with ArgoCD recently and decided it was time to migrate as much of my platform to being managed using GitOps as possible. Whilst it’s taken some time to get to grips with, it has enabled me to ensure all my code is committed to the relevant repositories. However, it has forced me to consider what would happen in an “extinction-level” event in the environment.

Continue reading

Testing Access to MinIO from Kubernetes

Recently I decided to migrate my GitLab instance off a RHEL VM and across to Kubernetes. Partly to reduce the number of virtual machines running in my environment, but more so because Kubernetes is now my platform of choice for my infrastructure applications.

However, post-upgrade I had issues storing files, and had Continue reading

Perform a Rolling Upgrade of HashiCorp Vault in Kubernetes

Recently I demonstrated how to install HashiCorp Vault on Kubernetes using Helm. Whilst it’s great to get up and running, upgrades are also an important part of application lifecycle management. In this post I’ll demonstrate how we can use rolling upgrades to bring Vault to the latest version, along with any plugins you may be using in your infrastructure.

Migrating HashiCorp Vault to Kubernetes using Helm

I’m a big fan of Kubernetes. It has taken a while to get my head around it, and deploying/operating it every day has helped me on my path to mastering it. As part of that journey, I decided that the HobbitCloud infrastructure would become “Kubernetes first”, and as much of it would be migrated as possible. The first item was Vault. Continue reading

Wednesday Tidbit: Fix Container Service Extension 4.1 Permissions Issue

A few weeks ago I upgraded Cloud Director CSE from 4.0.3 to 4.1. However, when I tried to deploy a new Kubernetes 1.25 cluster, I received the following error:

For this particular tenant, I clone the OrgAdmin role to a new one (OrgAdmin w/ Kubernetes) and then add the permissions of the Cluster Author role.

However, the 4.0.3 combination of this was missing a required permission for 4.1 – hence giving me the above error. After comparing my amalgamated role with the Cluster Author role, I spotted it was missing this:

After adding this permission to my “OrgAdmin w/ Kubernetes” role I was able to recreate CSE clusters.

And…. we’re back!

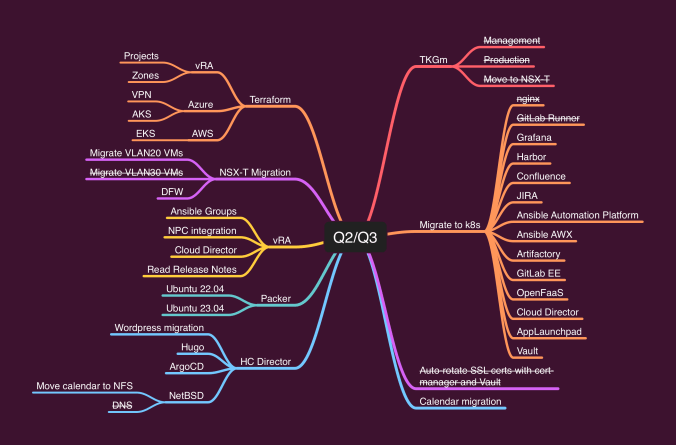

Mindmaps are useful

This past year has unfortunately not been a productive one for the blog. Daily work, a lab in a perpetual state of disrepair, and family constraints have conspired to keep me from producing content.

Well, today I’m back, and with a plethora of ideas and projects to share!

Over the coming weeks, I plan a major overhaul of the lab, and of my on-premises tenant that hosts my private workloads.

One part of this will be to move my blog from hosted WordPress to Kubernetes on-prem. The second part of the migration will be a move to Hugo.

So… lots to come. Stay tuned!

Back to Basics: Using Postman’s Environments and Tests with vRealize Automation

Most of the time I work with the vRealize Automation API through vRO Actions. Occasionally I have the need to make a change to the system which requires using the API. One such example is modifying the session timeout, as written by Gary at https://garyflynn.com/post/vrealize-automation-85-increase-session-timeout. Continue reading

Securing nginx on VMware TKGm with the NSX Advanced Load-Balancer and HashiCorp Vault

I’ve been running VMware’s Tanzu Kubernetes Grid in HobbitCloud for quite a while. It’s an easy way for me to consume Kubernetes, which I use for demonstrating containerised workload connectivity between clouds. I also deploy container workloads direct from vRealize Automation to TKGm clusters. Continue reading

Wednesday Tidbit: Enable Basic Auth in NSX-ALB (Avi) to enable Tanzu Kubernetes Grid

For a while now I’ve been a big fan of VMware’s Tanzu Kubernetes Grid Integrated, formerly Pivotal’s Kubernetes Service. However, whilst it was great in the beginning, newer technologies such as Cluster API have overtaken things like BOSH.

Whilst it could be frustratingly difficult to set up, VMware made serious efforts to simplify this with solutions such as the Management Console. However, after recently struggling with this and trying to create NSX-T Principal Identity certificates during setup, I decided it was time to walk away and go “all in” with TKG.

Tanzu Kubernetes Grid

After the initial fiddly bits (in my case, getting Docker to work nicely on Ubuntu), firing up the management cluster using the UI was trivially easy.

I first opted for kube-vip as my control plane endpoint provider. After a successful installation, I decided I wanted to use NSX Advanced Load Balancer (formerly known as Avi Vantage) for my load-balancer.

I re-ran the installation, entered the NSX ALB credentials, verified it saw my cloud and networks, and continued on.

However, no matter how many times I tried to perform the installation, it would always fail without even creating the control plane VMs. The logs were lacking in clarity as to the cause.

TL;DR

After poring over the initial documentation at https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/1.5/vmware-tanzu-kubernetes-grid-15/GUID-mgmt-clusters-install-nsx-adv-lb.html, it appears VMware have missed a critical step.

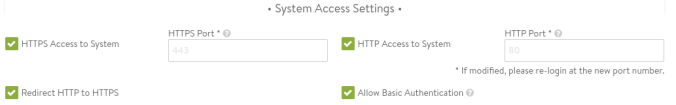

To enable the TKG installer to install and configure an endpoint provider in NSX ALB, Basic Authentication needs to be enabled.

To do this, in ALB click on the Administration tab, then expand Settings, and click Access Settings. Click the pencil icon on the right and then check the box for Allow Basic Authentication:

Makes all the difference

Frustratingly, I only found this out while reading Cormac Hogan’s blog, so kudos to him.
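
For anyone who would rather script this change than click through the UI, here is a minimal sketch of the payload edit. It assumes the setting is exposed in the controller's system configuration object as `portal_configuration.allow_basic_authentication`; that field path and the helper name `enable_basic_auth` are assumptions for illustration, so check them against the API documentation for your ALB version. The function only prepares the updated configuration; fetching the current configuration and sending it back to the controller is deliberately left out.

```python
import copy


def enable_basic_auth(system_config: dict) -> dict:
    """Return a copy of a system configuration dict with basic auth switched on.

    The field names used here are assumptions, not a confirmed API contract.
    """
    # Work on a deep copy so the caller's original configuration is untouched.
    updated = copy.deepcopy(system_config)
    # Create the portal section if the input does not have one yet.
    portal = updated.setdefault("portal_configuration", {})
    portal["allow_basic_authentication"] = True
    return updated


if __name__ == "__main__":
    # Hypothetical, heavily trimmed configuration as it might be read back.
    current = {"portal_configuration": {"allow_basic_authentication": False}}
    print(enable_basic_auth(current))
```

Keeping the edit as a pure function makes it easy to diff the before and after configuration before anything is pushed to a live controller.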

Automate SSL Certificate Issuing and Renewal with HashiCorp Vault Agent – Part 1: Configure Vault

One of the biggest challenges operations teams face is SSL certificate issuing and renewal. Often this is because different applications, like vendor appliances, have a complicated renewal process. Other times it is because corporations simply miss the renewal date. If large enterprises like Microsoft sometimes fail at this, what hope do the rest of us have? Continue reading
